Parsing StackOverflow Data Dump

Periodically, the Stack Exchange people publish a dump of the content of all public Stack Exchange sites. I played with it back in 2009 when this started, but have lost what little code I wrote back then.

I just downloaded the Sep 2011 dump. For StackOverflow alone, here are the file sizes:

total 43273296

-rw-r--r-- 1 pierce staff 170594039 Sep 7 2011 badges.xml

-rw-r--r-- 1 pierce staff 1916999879 Sep 7 2011 comments.xml

-rw-r--r-- 1 pierce staff 1786 Jun 13 2011 license.txt

-rw-r--r-- 1 pierce staff 10958639384 Sep 7 2011 posthistory.xml

-rw-r--r-- 1 pierce staff 7569879502 Sep 7 2011 posts.xml

-rw-r--r-- 1 pierce staff 4780 Sep 7 2011 readme.txt

-rw-r--r-- 1 pierce staff 193250161 Sep 7 2011 users.xml

-rw-r--r-- 1 pierce staff 1346527241 Sep 7 2011 votes.xml

Assuming each row is a line by itself, there were more than six million posts as of Sep 2011:

% egrep "row Id" posts.xml | wc -l

6479788

According to readme.txt in the dump package, the file posts.xml has the following schema:

- posts.xml

- Id

- PostTypeId

- 1: Question

- 2: Answer

- ParentID (only present if PostTypeId is 2)

- AcceptedAnswerId (only present if PostTypeId is 1)

- CreationDate

- Score

- ViewCount

- Body

- OwnerUserId

- LastEditorUserId

- LastEditorDisplayName="Jeff Atwood"

- LastEditDate="2009-03-05T22:28:34.823"

- LastActivityDate="2009-03-11T12:51:01.480"

- CommunityOwnedDate="2009-03-11T12:51:01.480"

- ClosedDate="2009-03-11T12:51:01.480"

- Title=

- Tags=

- AnswerCount

- CommentCount

- FavoriteCount

I'm not going to build a DOM tree of 6+ millions posts in RAM yet, so I'll use a SAX handler to parse the thing. First, install XMLSupport:

Gofer new

squeaksource: 'XMLSupport';

package: 'ConfigurationOfXMLSupport';

load.

(Smalltalk at: #ConfigurationOfXMLSupport) perform: #loadDefault.

As per SAXHandler's class comment, subclass it and override handlers under the "content" and "lexical" categories as needed:

SAXHandler subclass: #TnmDmSOHandler

instanceVariableNames: ''

classVariableNames: ''

poolDictionaries: ''

category: 'TNM-DataMining-StackOverflow'

For a schema as simple as the above, the method of interest is this:

startElement: aQualifiedName attributes: aDictionary

aQualifiedName = 'row' ifTrue: [ Transcript show: aDictionary keys; cr ]

Using a 1-row test set, the following do-it

TnmDmSOHandler parseFileNamed: 'p.xml'

produces this output:

#('Id' 'PostTypeId' 'AcceptedAnswerId' 'CreationDate' 'Score'

'ViewCount' 'Body' 'OwnerUserId' 'LastEditorUserId'

'LastEditorDisplayName' 'LastEditDate' 'LastActivityDate' 'Title'

'Tags' 'AnswerCount' 'CommentCount' 'FavoriteCount')

From here on, it is straightforward to fleshen startElement:attributes: to extract the stuff that is interesting to me.

To count the actual number of records, just keep a running count as each post is parsed, and print that number in the method endDocument. The run took a long time (by the wall clock) and counted 6,479,788 posts, the same number as produced by egrep'ping rowId.

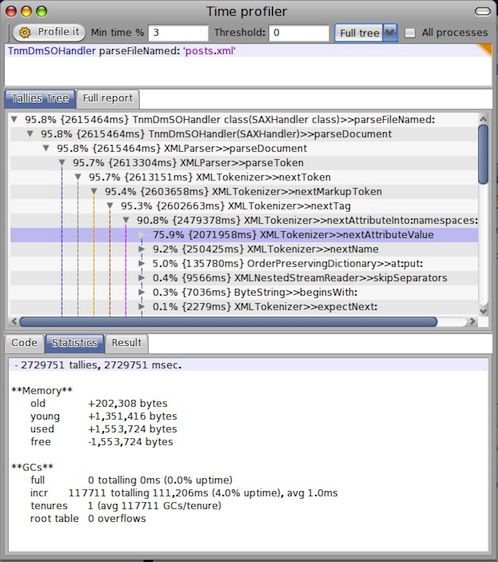

How about Smalltalk time? Let's ask TimeProfiler.

TimeProfiler onBlock: [ TnmDmSOHandler parseFileNamed: 'posts.xml' ]

Btw, saw this comment on HN: "If it fits on an iPod, it's not big data." :-)

Tags: parsing, StackOverflow, XML